Following up on my previous blog post on Go, I started investigating the best way to write APIs. I am convinced that, going forward, no single language will hold a monopoly. The onus will be on us to pick the language that best solves the problem at hand. We should widen our horizons and think outside the box.

REST has redefined the way we interact with applications. But REST is not the answer to every problem in the world of distributed (cloud) computing, where APIs are spread across services and coded in different languages (the Agile world). Consider a PSP (Payment Service Provider) or an insurance system. Systems like these are built from microservices (REST APIs), i.e. they are broken into self-contained APIs. Excellent! But the real business of a PSP or an insurer lies in calling these APIs to implement business processes (payment engine, claim processing, etc.). These business processes generate revenue for the company. It is very difficult to model such business flows in the REST (noun, verb) paradigm, for the reasons explained in the blog post "Why we have decided to move our APIs to gRPC".

We are in the era of cloud-native applications, where microservices must scale massively and performance is critical. There is also a pressing need for a high-performance mechanism for communication between microservices. The big question, then, is whether JSON-based APIs provide the performance and scalability that modern applications require. Is JSON really a fast format for exchanging data between applications? Is a RESTful architecture capable of expressing complex APIs? Can we easily build bidirectional streaming APIs with a RESTful architecture? HTTP/2 offers many more capabilities than its predecessor, so it is high time we leveraged them when building next-generation APIs. Let's investigate how gRPC and Protocol Buffers help.

Protocol Buffers, also referred to as protobuf, is Google's language-neutral, platform-neutral, extensible mechanism for serializing structured data. Protocol Buffers messages are encoded in a compact binary format, so they are typically smaller and faster to parse than text formats such as XML and JSON.
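To make the size difference concrete, here is a minimal sketch that hand-encodes a message with a single string field (field number 1) in the protobuf wire format and compares it with the JSON encoding of the same data. It uses only the Go standard library; the `helloRequest` type and the `encodeProto` helper are hypothetical names written for this illustration, not generated protobuf code.

```go
package main

import (
	"encoding/binary"
	"encoding/json"
	"fmt"
)

// helloRequest is a hypothetical stand-in for a message with one string field.
type helloRequest struct {
	Name string `json:"name"`
}

// appendUvarint appends v to buf using the base-128 varint encoding protobuf uses.
func appendUvarint(buf []byte, v uint64) []byte {
	var tmp [binary.MaxVarintLen64]byte
	n := binary.PutUvarint(tmp[:], v)
	return append(buf, tmp[:n]...)
}

// encodeProto hand-encodes the message in the protobuf wire format:
// a tag (field number 1, wire type 2 = length-delimited), a varint length,
// then the raw UTF-8 bytes. proto3 omits fields that hold their default value.
func encodeProto(r helloRequest) []byte {
	if r.Name == "" {
		return nil
	}
	const fieldNumber, wireTypeLen = 1, 2
	buf := appendUvarint(nil, fieldNumber<<3|wireTypeLen)
	buf = appendUvarint(buf, uint64(len(r.Name)))
	return append(buf, r.Name...)
}

// encodeJSON encodes the same data as JSON for comparison.
func encodeJSON(r helloRequest) ([]byte, error) {
	return json.Marshal(r)
}

func main() {
	req := helloRequest{Name: "gaurav"}
	p := encodeProto(req)
	j, err := encodeJSON(req)
	if err != nil {
		fmt.Println("json error:", err)
		return
	}
	fmt.Printf("protobuf: %d bytes (% x)\n", len(p), p)
	fmt.Printf("JSON:     %d bytes (%s)\n", len(j), j)
}
```

For this tiny message the binary form takes 8 bytes against 17 for JSON, mostly because the field name is replaced by a one-byte tag. Real savings depend on the shape of the data, so treat this as an illustration rather than a benchmark.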

gRPC is a modern, open-source remote procedure call (RPC) framework that can run anywhere. It enables client and server applications to communicate transparently and makes it easier to build connected systems. gRPC was developed by Google. It runs over HTTP/2 and therefore follows HTTP semantics. It supports both synchronous and asynchronous communication. It supports the classic request/response model and, my personal favorite, bidirectional streams. Because gRPC supports full-duplex streaming, both client and server applications can send streams of data asynchronously.
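The idea behind a bidirectional stream is that the sending and receiving halves run independently. The sketch below is only an in-process analogy built with goroutines and channels, not the gRPC streaming API: the `greetStream` and `exchange` functions are hypothetical names, and the two channels stand in for the two directions of a stream.

```go
package main

import "fmt"

// greetStream plays the server role: it answers each name as soon as it
// arrives, without waiting for the client to finish sending.
func greetStream(requests <-chan string, replies chan<- string) {
	for name := range requests {
		replies <- "Hello " + name
	}
	close(replies) // the server half-closes once the client has finished
}

// exchange plays the client role: one goroutine sends names while the
// caller's goroutine receives replies at the same time.
func exchange(names []string) []string {
	requests := make(chan string)
	replies := make(chan string)
	go greetStream(requests, replies)

	// Sending half of the stream.
	go func() {
		for _, n := range names {
			requests <- n
		}
		close(requests) // the client signals it has nothing more to send
	}()

	// Receiving half of the stream.
	var got []string
	for r := range replies {
		got = append(got, r)
	}
	return got
}

func main() {
	for _, reply := range exchange([]string{"gaurav", "gopher"}) {
		fmt.Println(reply)
	}
}
```

In real gRPC the same shape appears as a stream object with separate send and receive operations, and either side can close its direction independently.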

By default, gRPC uses Protocol Buffers both as its Interface Definition Language (IDL) and as its underlying message interchange format. The IDL is pluggable, though, so a different one can be plugged in. Unlike JSON and XML, Protocol Buffers is not just a message interchange format; it is also used to describe service interfaces (service endpoints). Thus Protocol Buffers covers both the service interface and the structure of the payload messages. In gRPC, one defines services and their methods along with the payload messages. As in a typical RPC system, a gRPC client application can directly call methods on a remote server as if it were a local object.
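The "call a remote method as if it were local" idea can be sketched without gRPC at all. The example below deliberately swaps in Go's standard `net/rpc` package (gob encoding over an in-memory pipe) so that it runs with no generated code; it is not how gRPC itself is wired. `GreeterClient` and `newInProcessGreeter` are hypothetical names for this illustration.

```go
package main

import (
	"fmt"
	"log"
	"net"
	"net/rpc"
)

// HelloRequest and HelloReply are plain Go stand-ins for the payload messages.
type HelloRequest struct{ Name string }
type HelloReply struct{ Message string }

// Greeter is the server-side implementation of the service.
type Greeter struct{}

// SayHello follows the method shape net/rpc requires: (args, *reply) error.
func (g *Greeter) SayHello(req HelloRequest, reply *HelloReply) error {
	reply.Message = "Hello " + req.Name
	return nil
}

// GreeterClient is a hand-written stub: callers see an ordinary method,
// while the stub forwards the call over the connection.
type GreeterClient struct{ rpcClient *rpc.Client }

// SayHello looks like a local call but executes on the server side.
func (c *GreeterClient) SayHello(name string) (string, error) {
	var reply HelloReply
	err := c.rpcClient.Call("Greeter.SayHello", HelloRequest{Name: name}, &reply)
	return reply.Message, err
}

// newInProcessGreeter connects a client stub to a server over net.Pipe,
// so the example needs no network access.
func newInProcessGreeter() (*GreeterClient, error) {
	srv := rpc.NewServer()
	if err := srv.Register(&Greeter{}); err != nil {
		return nil, err
	}
	clientConn, serverConn := net.Pipe()
	go srv.ServeConn(serverConn)
	return &GreeterClient{rpcClient: rpc.NewClient(clientConn)}, nil
}

func main() {
	client, err := newInProcessGreeter()
	if err != nil {
		log.Fatalf("setup failed: %v", err)
	}
	msg, err := client.SayHello("gaurav")
	if err != nil {
		log.Fatalf("call failed: %v", err)
	}
	fmt.Println(msg)
}
```

With gRPC, the stub and the request/reply types are generated from the proto file instead of being written by hand, which is what makes the same service callable from several languages.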

Now the real fun - let's get our hands dirty with gRPC + proto + Go/Java :-) and demonstrate the concepts we just learnt by building a "Greeter" service. I am becoming a polyglot programmer :-)

A typical gRPC service can be built in three steps as shown below:

Step 1: Define Service Definition (proto) & Generate

The IDL (proto) for "Greeter" looks like:

For the Go language

    syntax = "proto3";

    package greeter;

    // The greeting service definition.
    service Greeter {
      // Sends a greeting
      rpc SayHello (HelloRequest) returns (HelloReply) {}
    }

    // The request message containing the user's name.
    message HelloRequest {
      string name = 1;
    }

    // The response message containing the greetings
    message HelloReply {
      string message = 1;
    }

For the Java language

    syntax = "proto3";

    option java_multiple_files = true;
    option java_package = "com.gmalhotr.grpc.greeter.api";
    option java_outer_classname = "HelloWorld";
    option objc_class_prefix = "GREETER";

    package greeter;

    // The greeting service definition.
    service Greeter {
      // Sends a greeting
      rpc SayHello (HelloRequest) returns (HelloReply) {}
    }

    // The request message containing the user's name.
    message HelloRequest {
      string name = 1;
    }

    // The response message containing the greetings
    message HelloReply {
      string message = 1;
    }

Generate the server and client code using protoc (for Go) and the Maven Protocol Buffers plugin (for Java).

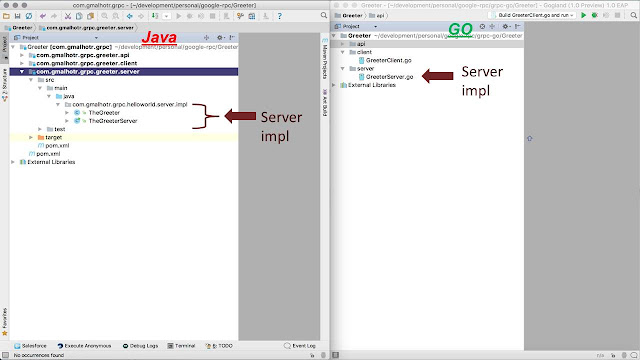

For this application, I chose IntelliJ. The following picture shows the above artifacts in the project layout:

Step 2: Create Server and Client

Server in Go

    package main

    import (
        "context"
        "log"
        "net"

        "google.golang.org/grpc"
        "google.golang.org/grpc/reflection"

        // Generated Greeter code. A relative import only works in GOPATH mode;
        // with Go modules, use the full package path instead.
        pb "../api"
    )

    const (
        port = ":50051"
    )

    // server is used to implement pb.GreeterServer.
    // Newer protoc-gen-go-grpc output also expects pb.UnimplementedGreeterServer
    // to be embedded in this struct.
    type server struct{}

    // SayHello implements pb.GreeterServer.
    func (s *server) SayHello(ctx context.Context, in *pb.HelloRequest) (*pb.HelloReply, error) {
        return &pb.HelloReply{Message: "Warm greeting " + in.Name + " ...."}, nil
    }

    func main() {
        lis, err := net.Listen("tcp", port)
        if err != nil {
            log.Fatalf("failed to listen: %v", err)
        }

        s := grpc.NewServer()
        pb.RegisterGreeterServer(s, &server{})

        // Register reflection service on gRPC server.
        reflection.Register(s)

        if err := s.Serve(lis); err != nil {
            log.Fatalf("failed to serve: %v", err)
        }
    }

Server in Java

    package com.gmalhotr.grpc.helloworld.server.impl;

    import com.gmalhotr.grpc.greeter.api.GreeterGrpc;

    import java.io.IOException;

    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    import io.grpc.Server;
    import io.grpc.netty.NettyServerBuilder;

    public class TheGreeterServer {

        private static final Logger LOG = LoggerFactory.getLogger(TheGreeterServer.class);

        private final int port = 12345;

        private Server server;

        /**
         * Main launches the server from the command line.
         */
        public static void main(String[] args) throws IOException, InterruptedException {
            final TheGreeterServer server = new TheGreeterServer();
            server.start();
            server.blockUntilShutdown();
        }

        // Keep the main thread alive until the server terminates.
        private void blockUntilShutdown() throws InterruptedException {
            if (server != null) {
                server.awaitTermination();
            }
        }

        private void start() {
            try {
                server = NettyServerBuilder
                        .forPort(port)
                        // bindService is the generated helper in the grpc-java version used here.
                        .addService(GreeterGrpc.bindService(new TheGreeter()))
                        .build()
                        .start();

                LOG.info("Server started, listening on {}", port);

                Runtime.getRuntime().addShutdownHook(new Thread() {
                    @Override
                    public void run() {
                        // Use stderr here since the logger may have been reset by its JVM shutdown hook.
                        System.err.println("*** shutting down gRPC server since JVM is shutting down");
                        TheGreeterServer.this.stop();
                        System.err.println("*** server shut down");
                    }
                });

                System.err.println("*** server started");
            } catch (IOException ex) {
                LOG.error(ex.getMessage(), ex);
            }
        }

        private void stop() {
            if (server != null) {
                server.shutdown();
            }
        }
    }

Below is the implementation of "TheGreeter", which sends the greeting to the client:

    package com.gmalhotr.grpc.helloworld.server.impl;

    import com.gmalhotr.grpc.greeter.api.GreeterGrpc;
    import com.gmalhotr.grpc.greeter.api.HelloReply;
    import com.gmalhotr.grpc.greeter.api.HelloRequest;

    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    import io.grpc.stub.StreamObserver;

    // With newer grpc-java releases, extend GreeterGrpc.GreeterImplBase instead.
    public class TheGreeter implements GreeterGrpc.Greeter {

        private static final Logger LOG = LoggerFactory.getLogger(TheGreeter.class);

        @Override
        public void sayHello(HelloRequest request, StreamObserver<HelloReply> responseObserver) {
            LOG.info("sayHello endpoint received request from {}", request.getName());

            HelloReply reply = HelloReply.newBuilder().setMessage("Hello " + request.getName()).build();

            // Send the single reply, then signal that the call is complete.
            responseObserver.onNext(reply);
            responseObserver.onCompleted();
        }
    }

The picture below shows the server and client in the project layout in the IDE:

Step 3: Execute

Start the server first. Then run the client, and the following output will appear:

2017/04/23 16:58:07 Greeting: Warm greeting gaurav ...

Conclusions

gRPC provides a very simple mechanism for performing RPC in a language- and platform-neutral way. It is simple (unlike EJB, CORBA, Webhooks, etc.) and runs over HTTP/2. As the saying goes, seeing is believing: have a look at the HTTP 2 Demo site to witness the performance boost from HTTP/2. With the rise of the cloud (distributed computing), I strongly believe the future lies in polyglot programming. Use the language that best solves the problem. gRPC can be used to implement complex flows.

It's also worth mentioning that the amount of code I had to write in Go for Greeter was far less than in Java.